Agentic AI and the new security mandate: How organizations must redesign trust, governance, and autonomy

Agentic AI is the first technology forcing organizations to rethink not just workflows, but judgment.

When decisions can be automated, the transformation isn’t merely operational, it’s structural.

These systems are redefining how trust is established, how governance is interpreted, and how security must react in real time. The question is no longer “What can AI do?” but “What should AI be allowed to do?” That shift is already rewriting the foundations of modern security and compliance.

Our recent webinar: Supercharging Security & Compliance with Agentic AI, brought together experts from Kustos and Assurant to unpack what it really takes to operationalize agentic systems in regulated, high-stakes environments. Their collective viewpoint was:

Agentic AI is not a toolset upgrade. It is a structural shift in how security, governance, and digital operations coexist.

For many CIOs and CISOs, the first question is familiar: How can AI improve security? Can it detect threats faster, reduce audit fatigue, tighten identity controls, or enhance how we manage compliance across rapidly evolving environments?

The second question is the harder one, and the one that genuinely tests organizational readiness: How do we secure AI itself? How do we ensure agentic systems don’t leak sensitive data, act without authorization, or become vulnerable to prompt-injection or model-poisoning attacks? And how do we maintain the same level of rigor we demand from human decision-making?

What emerged from the discussion is that these two questions can no longer be treated separately. AI’s promise is undeniable, but so is its risk surface. Real-world incidents already show employees inadvertently feeding sensitive data into unmanaged LLMs, while machine-to-machine interactions introduce exposure points legacy security models were never designed to interpret.

During the webinar, 61 percent of attendees said AI should not enforce compliance without human oversight. A sentiment that echoes across industries. Autonomy without governance will not scale. The advantage now belongs to organizations that adopt, govern, and secure AI simultaneously, rather than treating these capabilities as sequential steps.

A defining theme was the distinction between yesterday’s AI systems and today’s agentic architectures. Traditional AI: copilots, chatbots, prompt-response interfaces waited for instructions. You asked; they answered. The interaction stopped there.

Agentic AI behaves differently. It interprets goals instead of commands, breaks down tasks independently, chooses tools, triggers workflows, and sequences decisions. In many cases, its behavior mirrors that of a junior analyst or engineer capable of chaining actions without direct supervision.

This is where the promise and risk converge. Once an AI system can act, the line between assistance and autonomy becomes profoundly important. One panelist captured this elegantly when describing the need to preserve the “soul” of critical decisions, the parts of judgment that cannot be outsourced.

Where do you draw the line? What's the soul you keep, and what do you automate? It depends on context, not generic rules. - Joshua Danielson, Founder &CEO, Kustos

This philosophy aligns closely with the VRIZE perspective: agentic AI is at its best when it amplifies human judgment, not replaces it. Organizations that define this boundary with intention can embrace autonomy while maintaining authority over decisions that carry strategic or regulatory consequences. The future is not about choosing between autonomy and oversight; it is about designing a model in which both coexist.

Despite all the noise around AI, our conversation stayed grounded in practical, high-impact ways organizations are already leveraging agentic systems.

Security failures are most expensive when caught at the end of the development cycle. Agentic AI shifts this dynamic by embedding intelligence directly into the pipeline. These systems analyze coding standards, regulatory policies, and architecture guidelines in context, identifying misconfigurations or privacy concerns not just by pattern, but by understanding intent and data sensitivity. The result is a move from reactive fixing to proactive assurance.

Incident response has always demanded speed, context, and precision — qualities agentic AI is naturally suited for. Instead of automating simple playbooks as traditional SOAR platforms do, Agentic AI can compare signals, correlate events, cluster suspicious behaviors, and recommend tailored actions. It can help eliminate noise while surfacing genuinely meaningful threats.

But for actions with large blast radiuses — credential resets, cross-region containment, regulator notifications, human oversight remains non-negotiable. The emerging model is clear: AI handles the work; humans handle the consequences.

Third-party risk and GRC functions are uniquely burdened by manual work, which includes gathering evidence, completing questionnaires, responding to audits, and reviewing contractual policies.

In this scenario, Agentic AI introduces a new model of continuous compliance:

This reduces operational burden while improving accuracy and audit readiness.

A mature AI program views governance and security as interdependent. Governance guides how AI behaves ethically, legally, and responsibly. Security ensures the system withstands attack, protects data, and operates reliably.

Governance ensures our systems behave responsibly and remain explainable. Security ensures they remain safe, available, and resilient. You cannot succeed with one without the other - Shil Majumdar, Director of IT, Assurant

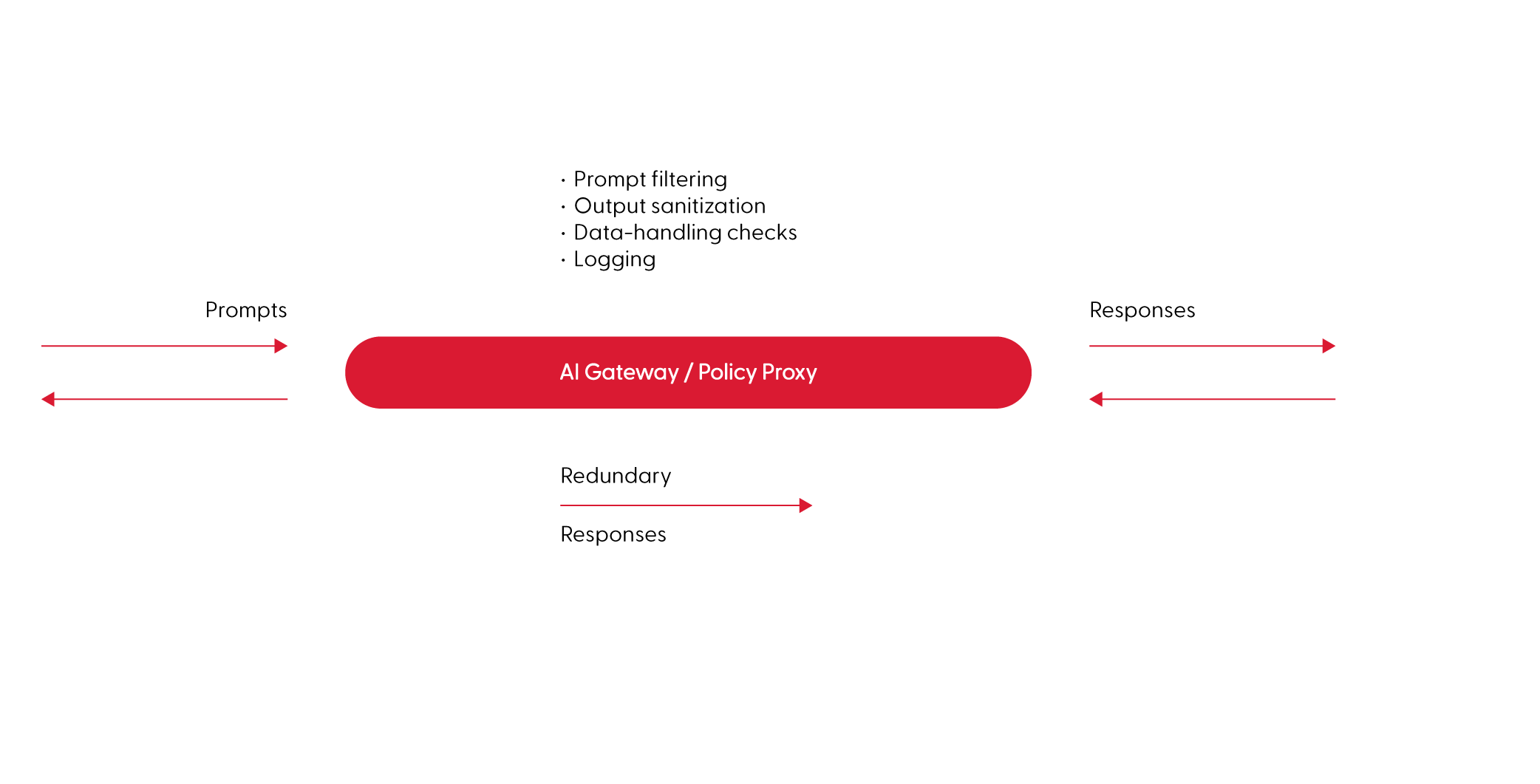

This balance becomes even more essential in an agentic environment where actions cascade across workflows. VRIZE’s own perspective, reflected in our whitepaper on Role of Ethics in AI , emphasizes that responsible behavior begins long before deployment. The webinar underscored the importance of introducing an AI Gateway or Policy Proxy, a centralized enforcement layer that intercepts all prompts, responses, and actions.

This gateway acts as a sentinel: filtering prompts, sanitizing outputs, validating data handling, and logging activity for investigation and audit. When combined with regulated browsers, identity governance, and strong application controls, this architecture forms the backbone of a secure AI ecosystem.

This architecture, paired with regulated browsers, application control, and strong identity governance, forms the foundation of a secure AI ecosystem.

Meanwhile, industry frameworks are also maturing rapidly. Organizations that primarily use AI can lean on emerging guidelines such as the NIST AI Risk Management Framework to structure their assurance model. Those building AI systems will increasingly rely on the secure AI frameworks and ensure that they do not grow faster than the organization’s ability to govern it.

One of the most frequent questions from the audience was whether agentic AI could eventually manage compliance without human involvement. For now, the answer is appropriate: not yet.

Trust must be earned through transparency, explainability, and predictable behavior over time. And with 54% of attendees admitting their organizations are experimenting with AI without a formal governance model, it’s clear that oversight cannot be optional.

Early deployments demand strong human-in-the-loop supervision, deep observability, and explicit guardrails. As maturity grows, the human role will evolve shifting from performing tasks to architecting the systems that determine how AI interprets policy and acts on it.

The discussion also addressed a critical question: Who is accountable when an agent makes a mistake? The answer is both simple and uncompromising. Organizations remain fully accountable. Agentic AI systems certainly amplify the accountability of the humans and institutions that deploy them, but these systems do not assume legal liability. This notion placed a renewed emphasis on:

For those early in their AI journey, the panelists highlighted a simple progression:

Agentic AI is not a passing trend. It is becoming part of the operational fabric of modern enterprises, similar to the shift that accompanied cloud computing. Early adopters will build a meaningful advantage, while laggards risk widening gaps.

The opportunity ahead is profound: security and compliance functions that are anticipatory, more continuous, and more deeply integrated into the fabric of the enterprise. But responsibility is equally significant. AI must be governed with intention, engineered with discipline, and deployed with respect for both risk and potential.

The organizations that strike this balance, where curiosity is paired with controls, and innovation is matched with governance, will not merely adopt agentic AI. They will elevate how the enterprise earns trust, protects value, and operates securely on scale.

Because in the era of agentic intelligence, trust isn’t a feature. It’s the strategy.